Mesos Executor

Apache Mesos is an open-source project for managing computer clusters. In this article we present one of its components, the Executor, and more specifically the Custom Executor. We also explain why you should consider writing your own executor, with examples of features you can gain by taking more control over how tasks are executed. Our executor implementation is available at github.com/allegro/mesos-executor.

TL;DR: Apache Mesos is a great tool, but in order to build a truly cloud native platform for microservices you need to get your hands dirty and write custom integrations with your ecosystem.

If you are familiar with Apache Mesos, skip the introduction.

Apache Mesos #

Apache Mesos is a tool to abstract your data center resources. In contrast to many container orchestrators such as Kubernetes, Nomad or Swarm, Mesos makes use of two-level scheduling. This means Mesos is responsible only for offering resources to higher level applications called frameworks or schedulers. It’s up to a framework if it accepts an offer or declines it.

Mesos originated at the University of California, Berkeley. The idea was not entirely new: Google had already been running a similar system, Borg, for some time. Development of Mesos accelerated after one of its creators, Ben Hindman, gave a talk about it at Twitter. Twitter hired Ben, adopted Mesos as its resource management system and built a dedicated framework, Aurora. Both projects were donated to the Apache Software Foundation and became top-level projects.

Currently Mesos is used by many companies, including Allegro, Apple, Twitter and Alibaba. At Allegro we started using Mesos and Marathon in 2015. At that time there were no mature, battle-tested alternatives.

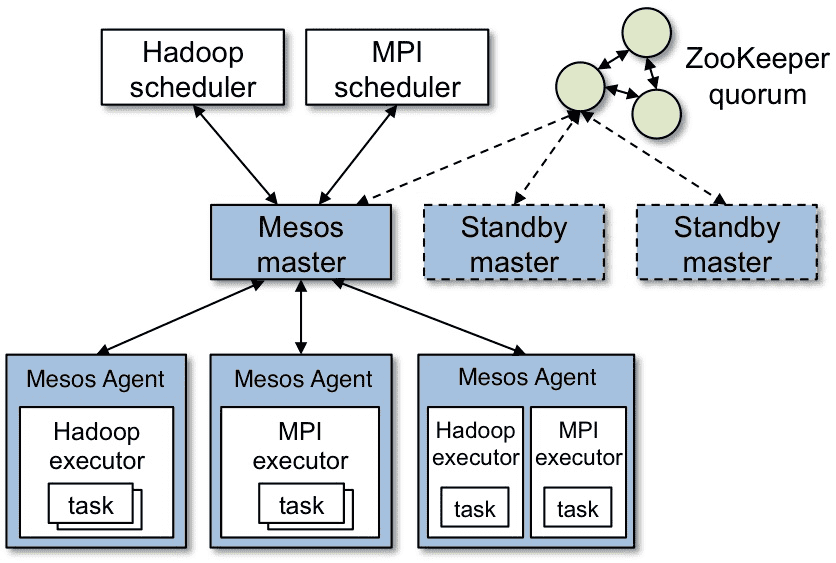

Mesos Architecture #

A Mesos Cluster is built out of three main elements: Masters, Agents and Frameworks.

Masters #

A Mesos Master is the brain of the whole datacenter. It controls the state of the whole cluster. It receives resource information from agents and presents those resources to a framework as an offer. The Master is also the communication hub between a framework and its tasks. At any given time only one Master acts as the leader; the other instances are in standby mode. ZooKeeper is used to elect the leader and to notify all instances when the leader abdicates.

Agents #

Agents are responsible for running tasks, monitoring their state and presenting resources to the Master. They also receive requests to launch and kill tasks. Agents notify the Master when a task changes its state (for example, when it fails).

Frameworks #

Frameworks (aka schedulers) are not a part of Mesos. They are custom implementations of business logic. The best known frameworks are container orchestrators such as Google Kubernetes, Mesosphere Marathon and Apache Aurora. Frameworks receive resource offers from the Master and, based on custom scheduling logic, decide whether to launch a task with the given resources. Multiple frameworks can run at once, each with a different scheduling policy and a different purpose. This lets Mesos maximize resource usage by sharing the same resources between different workloads, for example: batch jobs (e.g., Hadoop, Spark), stateless services, continuous integration (e.g., Jenkins), databases and caches (e.g., ArangoDB, Cassandra, Redis).

Besides those big elements, there are also smaller parts of the architecture. One of them is the executor.
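To make the offer cycle more concrete, here is a minimal sketch in Go of how a framework might decide what to do with a single offer. The `Offer` and `Task` types and the `decide` function are hypothetical simplifications invented for this illustration; they are not part of the Mesos API, and a real scheduler would also consider roles, ports, constraints and many other things.

```go
package main

import "fmt"

// Offer is a simplified, hypothetical stand-in for a Mesos resource offer.
type Offer struct {
	AgentID string
	CPUs    float64
	MemMB   float64
}

// Task describes the resources a framework wants for one task (hypothetical type).
type Task struct {
	Name  string
	CPUs  float64
	MemMB float64
}

// fits reports whether the offer still has enough resources for the task.
func fits(o Offer, t Task) bool {
	return o.CPUs >= t.CPUs && o.MemMB >= t.MemMB
}

// decide applies a first-fit policy: every pending task that fits is launched
// and its resources are subtracted from the offer. An empty launch list means
// the framework declines the offer.
func decide(o Offer, pending []Task) (launch, rest []Task) {
	for _, t := range pending {
		if fits(o, t) {
			o.CPUs -= t.CPUs
			o.MemMB -= t.MemMB
			launch = append(launch, t)
		} else {
			rest = append(rest, t)
		}
	}
	return launch, rest
}

func main() {
	offer := Offer{AgentID: "agent-1", CPUs: 2, MemMB: 1024}
	pending := []Task{
		{Name: "web", CPUs: 1, MemMB: 512},
		{Name: "batch", CPUs: 4, MemMB: 2048},
		{Name: "cache", CPUs: 0.5, MemMB: 256},
	}

	launch, rest := decide(offer, pending)
	if len(launch) == 0 {
		fmt.Println("decline offer from", offer.AgentID)
		return
	}
	fmt.Printf("accept offer from %s: launch %d task(s), %d still pending\n",
		offer.AgentID, len(launch), len(rest))
}
```

The point of the sketch is that the scheduling policy lives entirely in the framework: Mesos only delivers the offer, and a different framework could plug in a completely different `decide`.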

Executor #

An executor is a process that is launched on agent nodes to run the framework’s tasks. The executor is started by a Mesos Agent and subscribes to it to get tasks to launch. A single executor can launch multiple tasks. Executors and agents communicate via the Mesos HTTP API. The executor notifies the Agent about task state by sending task status updates. The Agent, in turn, notifies the framework about the task state change. The executor can perform health checks and any other operations required by a framework to run a task.
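The event flow described above can be sketched as a small in-memory simulation in Go. The event names (`LAUNCH`, `KILL`) and task states (`TASK_STARTING`, `TASK_RUNNING`, `TASK_KILLED`) mirror those used by Mesos, but the `Event` and `StatusUpdate` types and the `handle` function are hypothetical: the sketch makes no HTTP calls and is not how our executor is implemented.

```go
package main

import "fmt"

// Event mimics the shape of an event an agent sends to an executor (simplified).
type Event struct {
	Type   string // e.g. "SUBSCRIBED", "LAUNCH", "KILL"
	TaskID string
}

// StatusUpdate is what the executor reports back to the agent (simplified).
type StatusUpdate struct {
	TaskID string
	State  string
}

// handle processes one event, updates the set of running tasks and returns
// the status updates the executor would send to the agent.
func handle(running map[string]bool, ev Event) []StatusUpdate {
	switch ev.Type {
	case "LAUNCH":
		running[ev.TaskID] = true
		return []StatusUpdate{
			{TaskID: ev.TaskID, State: "TASK_STARTING"},
			{TaskID: ev.TaskID, State: "TASK_RUNNING"},
		}
	case "KILL":
		if !running[ev.TaskID] {
			return nil // unknown task, nothing to report
		}
		delete(running, ev.TaskID)
		return []StatusUpdate{{TaskID: ev.TaskID, State: "TASK_KILLED"}}
	default:
		return nil // SUBSCRIBED and other events need no update in this sketch
	}
}

func main() {
	running := map[string]bool{}
	events := []Event{
		{Type: "SUBSCRIBED"},
		{Type: "LAUNCH", TaskID: "task-1"},
		{Type: "KILL", TaskID: "task-1"},
	}
	for _, ev := range events {
		for _, u := range handle(running, ev) {
			fmt.Printf("%s -> %s\n", u.TaskID, u.State)
		}
	}
}
```

A custom executor gets its leverage from these two handlers: anything it does between receiving `LAUNCH` and reporting `TASK_RUNNING`, or between receiving `KILL` and reporting `TASK_KILLED`, is under its control.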

There are four types of executors:

- Command Executor – Speaks V0 API and is capable of running only a single task.

- Docker Executor – Similar to the command executor but launches a Docker container instead of a command.

- Default Executor – Introduced in Mesos 1.0 release. Similar to command executor but speaks V1 API and is capable of running pods (aka task groups).

- Custom Executor – The executors above are built into Mesos. A custom executor is written by a user to handle custom workloads. It can use the V0 or V1 API and can run single or multiple tasks, depending on the implementation. In this article we focus on our custom implementation and what we achieved with it.

</div></div>

Why do we need a custom executor? #

At Allegro we use Mesos and Marathon as a platform to deploy our microservices. Currently we have nearly 600 services running on Mesos. For service discovery we use Consul. Some services are exposed to the public and some are hidden behind load balancers (F5, HAProxy, Nginx) or a cache (Varnish).

At Allegro we try to follow the 12 Factor App Manifesto. This document defines how a cloud native application should behave and interact with the environment it runs in so that it can be deployed in the cloud. Below we present how we achieve 3 of the 12 factors with the custom executor.

Allegro Mesos Executor #

III. Config – Store config in the environment #

With a custom executor we are able to place an app’s configuration in its environment. The executor is not strictly required for this and could be replaced with Mesos Modules, but it is easier for us to maintain our executor than a module (a C++ shared library).

Configuration is kept in our Configuration Service, which is backed by Vault and stores configurations encrypted. When the executor starts, it connects to the Configuration Service and downloads the configuration for the specified application. Authentication, by necessity, is performed with a certificate generated by a dedicated Mesos module; that module was written years ago and convinced us that we do not want to keep any logic there. The certificate is signed by an internal authority. The pictures below present how communication looks in our installation: the Mesos Agent obtains a signed certificate from the CA (certificate authority) and passes it to the executor in an environment variable.

Previously, every application contained dedicated logic for reading this certificate and downloading its configuration from the Configuration Service. We replaced this logic with our executor, which uses the certificate to authenticate, downloads the decrypted configuration and passes it in environment variables to the task it launches.
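The last step, passing downloaded configuration to the task as environment variables, might look roughly like the Go sketch below. It assumes the configuration has already been fetched and decrypted into a plain map; the key naming rule in `toEnvName` and the `mergeEnv` helper are assumptions made for this illustration and do not describe our Configuration Service or the actual executor code.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// toEnvName converts a config key such as "db.pool-size" into "DB_POOL_SIZE".
// The naming rule is an assumption made for this sketch.
func toEnvName(key string) string {
	r := strings.NewReplacer(".", "_", "-", "_")
	return strings.ToUpper(r.Replace(key))
}

// mergeEnv returns the base environment extended with config entries in
// KEY=value form. Values from config override entries already in base.
func mergeEnv(base []string, config map[string]string) []string {
	merged := map[string]string{}
	for _, kv := range base {
		parts := strings.SplitN(kv, "=", 2)
		if len(parts) == 2 {
			merged[parts[0]] = parts[1]
		}
	}
	for k, v := range config {
		merged[toEnvName(k)] = v
	}
	out := make([]string, 0, len(merged))
	for k, v := range merged {
		out = append(out, k+"="+v)
	}
	sort.Strings(out) // deterministic order, convenient for logging and tests
	return out
}

func main() {
	// Stand-in for the decrypted response of a configuration service.
	config := map[string]string{
		"db.url":       "postgres://db:5432/app",
		"feature.flag": "on",
	}
	base := []string{"PATH=/usr/bin", "DB_URL=old"}

	for _, kv := range mergeEnv(base, config) {
		fmt.Println(kv)
	}
}
```

In a real executor the resulting slice could be assigned to the `Env` field of an `exec.Cmd` before starting the task, so the application reads its configuration from the environment and needs no client code of its own.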

IX. Disposability – Maximize robustness with fast startup and graceful shutdown #

Processes shut down gracefully when they receive a SIGTERM signal from the process manager.

Although the Mesos Command Executor supports graceful shutdown in its configuration, it does not work properly with shell commands (see MESOS-6933).

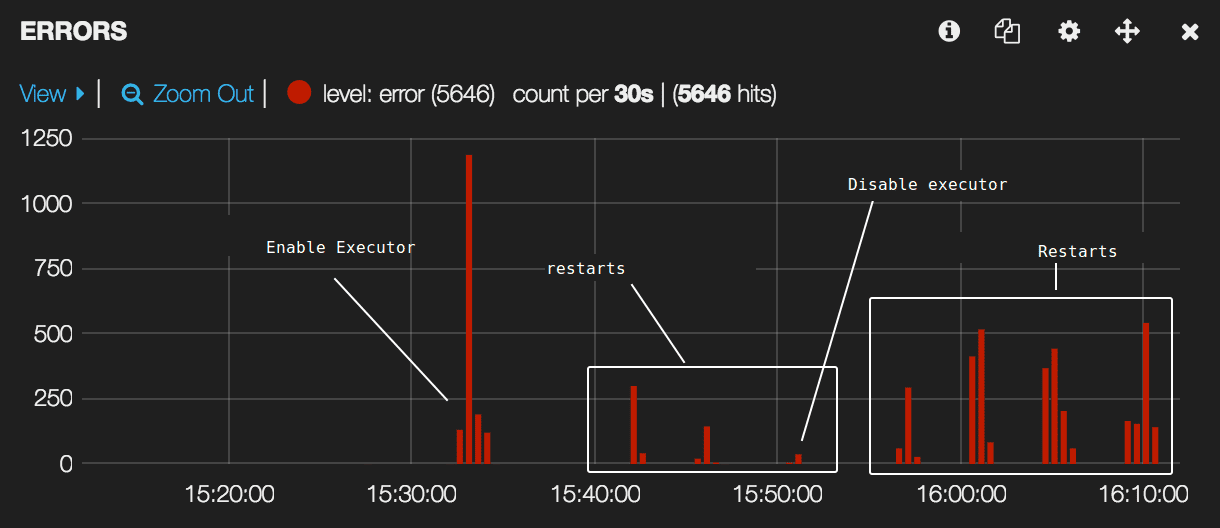

The diagram above presents the life cycle of a typical task. At the beginning its binaries are fetched and the executor is started (1). After starting, the executor can run some hooks (for example to load configuration from the configuration service), then it starts the task and immediately begins health checking it (2). Our applications are plugged into the discovery service (Consul), load balancers (F5, Nginx, HAProxy) and caches (Varnish) when they start answering the health check (3). When the instance is killed, it is first unregistered from all services (4), then SIGTERM is sent and finally, if the instance is still running, it receives a SIGKILL (5).

This approach gives us nearly no downtime at deployment and could not be achieved without a custom executor. Below you can see a comparison of a sample application launched and restarted with and without our executor’s graceful shutdown feature. We deployed this application with our executor at 15:33 (first peak) and restarted it 3 times (there are some errors, but fewer than before). Then we rolled back to the default command executor and restarted it a couple of times (more errors). Some errors remain because the cache is not warmed up at start, but we see a huge reduction of errors during deployments.

What’s more, with this approach we can notify the user that an external service errored by using the Task State Reason, so the details are visible to the end user. Incidentally, by implementing custom health checks and notifications, we avoided MESOS-6790.

The previous solution (called Marathon-Consul) was based on Marathon events. Marathon-Consul consumed events about tasks starting and being killed and registered or deregistered the instance in Consul accordingly. This caused a delay: an instance was deregistered only when it was already gone. With the executor we can guarantee that an application stops receiving traffic before we shut it down.

XI. Logs – Treat logs as event streams #

The Command Executor redirects standard streams to stdout/stderr files. In our case we want to push logs into the ELK stack. The task definition contains all metadata about the task (host, name, id, etc.), so we can enrich each log line and push it further. Logs are generated in a key-value format that is easy to parse and read for both humans and machines. This allows us to easily index fields and reduces the cost of JSON processing in the application. The whole integration is done by the executor.
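As an illustration of the enrichment step, the Go sketch below appends task metadata to a key-value log line. The `enrich` function, the field names and the quoting style are assumptions chosen for this example; they do not describe the exact format our executor emits.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// enrich appends task metadata to a log line as key="value" pairs.
// Keys are sorted so that the output is stable.
func enrich(line string, meta map[string]string) string {
	keys := make([]string, 0, len(meta))
	for k := range meta {
		keys = append(keys, k)
	}
	sort.Strings(keys)

	var b strings.Builder
	b.WriteString(line)
	for _, k := range keys {
		fmt.Fprintf(&b, " %s=%q", k, meta[k])
	}
	return b.String()
}

func main() {
	// Hypothetical metadata taken from the task definition.
	meta := map[string]string{
		"host":    "agent-1",
		"task_id": "app.123",
	}
	// Stand-in for lines read from the task's standard output.
	lines := []string{
		`level=info msg="server started"`,
		`level=error msg="upstream timeout"`,
	}
	for _, l := range lines {
		fmt.Println(enrich(l, meta))
	}
}
```

Since the executor already knows the task metadata, the application only has to write plain key-value lines to its standard output.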

What could be improved #

Mesos provides an old-fashioned way to extend its functionality: we have to write our own shared library in C++ (see Mesos Modules for more information). This solution has its advantages, but it also significantly increases the time needed for development and forces us to use technology that is not currently used at our company. Additionally, errors in our code could propagate to the Mesos agent, possibly causing it to crash. We do not want to go back to the times of segmentation faults causing service failures. A more modern solution based on Google Protobuf (already used by Mesos) would be appreciated. Finally, upgrading Mesos often required us to recompile all modules and therefore to maintain different binaries for different Mesos versions.

A lot of solutions for Mesos maintain their own executors because other integration methods are not as flexible, for example:

- Apache Aurora

- Singularity

- go-mesos-executor

We also made a mistake by putting our service integration logic directly into the executor binary. As a result, the integration code became tightly coupled with Mesos-specific code, making it significantly more difficult to reuse elsewhere, e.g. on Kubernetes. We are in the process of separating this code into stand-alone binaries.

Comparison with other solutions (read K8s) #

Kubernetes provides a more convenient way to integrate your services with it. Container Lifecycle Hooks allow you to define an executable or HTTP endpoint that will be called during lifecycle events of the container. This approach allows the use of any technology to integrate with other services and reduces the risk of tightly coupling the code with a specific platform. In addition, Kubernetes has a built-in graceful shutdown mechanism integrated with the hooks, which ultimately eliminates the need for an equivalent of our custom Mesos executor on this platform.

Conclusion #

Mesos is totally customizable and able to handle all edge cases, but sometimes that comes at a really high cost in terms of time, maintainability and stability. Sometimes it’s better to use solutions that work out of the box instead of reinventing the wheel.

</div></div>